
Systems of random variables

Of central interest in mathematical statistics is the study of systems of two or more random variables and of their statistical relationships with one another.

By analogy with the distribution series of a single discrete random variable X, a distribution matrix is constructed for two discrete random variables X and Y. It is a rectangular table listing all the probabilities $p_{ij} = P\{X = x_i,\ Y = y_j\}$, $i = 1, \dots, n$, with $j$ running over the indices of the possible values of Y.
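A minimal sketch of such a distribution matrix in Python may make the table concrete. The values of X and Y and the probabilities below are hypothetical, chosen only for illustration; the helper names `marginal_x` and `marginal_y` are likewise assumptions, not notation from the text.

```python
from fractions import Fraction

# Hypothetical values of X (rows) and Y (columns); not taken from the text.
x_values = [0, 1]
y_values = [10, 20, 30]

# Distribution matrix: p[i][j] = P{X = x_values[i], Y = y_values[j]}.
p = [
    [Fraction(1, 10), Fraction(2, 10), Fraction(1, 10)],
    [Fraction(2, 10), Fraction(3, 10), Fraction(1, 10)],
]

def marginal_x(matrix):
    """Distribution series of X: sum each row over all values of Y."""
    return [sum(row) for row in matrix]

def marginal_y(matrix):
    """Distribution series of Y: sum each column over all values of X."""
    return [sum(col) for col in zip(*matrix)]

total = sum(sum(row) for row in p)  # all entries must add up to 1
px = marginal_x(p)
py = marginal_y(p)
```

Summing the rows or the columns of the matrix recovers the distribution series of each variable taken separately, which is why the matrix is the natural two-variable analogue of the series.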

Events (or trials) are called independent if the probability of occurrence (outcome) of each of them does not depend on which events (outcomes) took place in the other cases (trials).
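As an illustration, independence of two events can be checked with the standard product criterion P(A and B) = P(A) P(B). This criterion is not stated in the text above and is used here as an assumption; the two-dice sample space and the event names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Sample space: ordered outcomes of two fair dice (an illustrative choice).
outcomes = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability of an event given as a predicate on an outcome."""
    favourable = sum(1 for o in outcomes if event(o))
    return Fraction(favourable, len(outcomes))

def independent(a, b):
    """Product criterion: P(A and B) == P(A) * P(B)."""
    return prob(lambda o: a(o) and b(o)) == prob(a) * prob(b)

def first_is_six(o):
    return o[0] == 6

def second_is_even(o):
    return o[1] % 2 == 0

def sum_is_twelve(o):
    return o[0] + o[1] == 12

dice_independent = independent(first_is_six, second_is_even)
dice_dependent = independent(first_is_six, sum_is_twelve)
```

Here the result on the first die tells nothing about the second die, so the first pair of events passes the check, whereas knowing that the first die shows six changes the chance that the sum is twelve.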

Two random variables X and Y are called independent if all events connected with them are independent: for example,

In terms of distribution laws, the following definition is also valid: two random variables X and Y are called independent if the distribution law of each of them does not depend on the value taken by the other.

The joint distribution function of a system of two random variables (X, Y) is the probability that the inequalities X < x and Y < y hold simultaneously:

$$F(x, y) = P\{X < x,\ Y < y\}.$$

Event

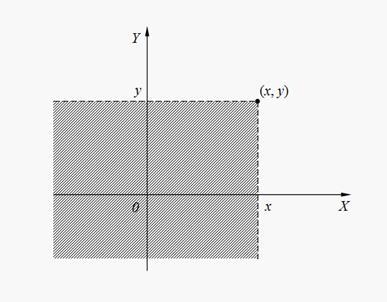

Geometrically, the joint distribution function F(x, y) is the probability that the random point (X, Y) falls inside the infinite quadrant of the plane with its vertex at the point (x, y) (the shaded area in Fig. 8).

Fig. 8. Geometrical interpretation of the joint distribution function F(x, y)

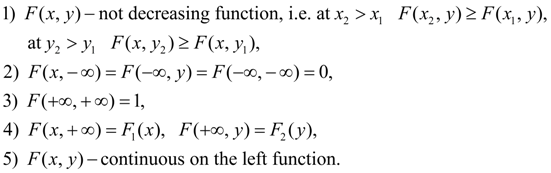
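For a discrete system, the quadrant interpretation can be sketched in a few lines of Python: F(x, y) is the sum of all matrix entries whose point lies strictly below and to the left of (x, y). The distribution matrix below is hypothetical, and the function name `joint_cdf` is an assumption made for this example.

```python
from fractions import Fraction

# Hypothetical discrete system (values and probabilities are illustrative).
x_values = [0, 1]
y_values = [10, 20, 30]
p = [
    [Fraction(1, 10), Fraction(2, 10), Fraction(1, 10)],
    [Fraction(2, 10), Fraction(3, 10), Fraction(1, 10)],
]

def joint_cdf(x, y):
    """F(x, y) = P{X < x, Y < y}, with strict inequalities as in the text."""
    return sum(
        p[i][j]
        for i, xi in enumerate(x_values)
        for j, yj in enumerate(y_values)
        if xi < x and yj < y
    )

corner = joint_cdf(1, 20)       # only the point (0, 10) is in the quadrant
empty = joint_cdf(0, 10)        # no point lies strictly inside the quadrant
everything = joint_cdf(2, 31)   # the quadrant covers every point
```

Moving the vertex of the quadrant up or to the right can only add points to the sum, so the function computed this way never decreases in either argument.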

Basic properties of the joint distribution function:

Here

A system of two random variables (X, Y) is called a continuous system if its joint distribution function F(x, y) is a continuous function that is differentiable with respect to each argument and has the second mixed partial derivative

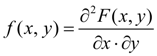

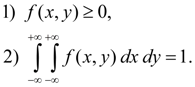

The function $f(x, y) = \dfrac{\partial^2 F(x, y)}{\partial x\, \partial y}$ is called the joint density function of the system of two random variables (X, Y).

Basic properties of the joint density function:

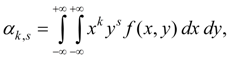
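The relation between the joint distribution function and the joint density can be checked numerically. The sketch below assumes a specific system that does not come from the text: two independent exponential variables with unit rate, for which F(x, y) = (1 - e^{-x})(1 - e^{-y}) and f(x, y) = e^{-(x + y)} for positive x and y. It also assumes that the properties in question are the usual ones, a non-negative density whose integral over the plane equals 1. All function names are hypothetical.

```python
import math

def joint_cdf(x, y):
    """Assumed F(x, y): two independent unit-rate exponential variables."""
    if x <= 0 or y <= 0:
        return 0.0
    return (1.0 - math.exp(-x)) * (1.0 - math.exp(-y))

def joint_density(x, y):
    """Closed-form mixed partial derivative of the assumed F."""
    if x < 0 or y < 0:
        return 0.0
    return math.exp(-(x + y))

def mixed_partial(F, x, y, h=1e-3):
    """Central finite-difference estimate of d^2 F / (dx dy)."""
    return (F(x + h, y + h) - F(x + h, y - h)
            - F(x - h, y + h) + F(x - h, y - h)) / (4.0 * h * h)

def integrate_density(f, upper=20.0, steps=400):
    """Midpoint-rule estimate of the integral of f over [0, upper]^2.

    The assumed density vanishes outside the positive quadrant and is
    negligible beyond `upper`, so this approximates the whole-plane integral.
    """
    h = upper / steps
    mids = [(i + 0.5) * h for i in range(steps)]
    return sum(f(x, y) for x in mids for y in mids) * h * h

numeric = mixed_partial(joint_cdf, 0.5, 1.2)
exact = joint_density(0.5, 1.2)
total = integrate_density(joint_density)
```

The finite-difference value agrees with the closed-form density to several decimal places, and the numerical integral is close to 1, within the accuracy of the midpoint rule.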

Initial and central moments of various orders are usually taken as the numerical characteristics of a system of two random variables X and Y. The order of a moment is the sum of its indices, k + s. The initial moment of order k + s of a system of two random variables X and Y is the mathematical expectation of the product of $X^k$ and $Y^s$:

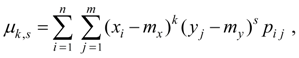

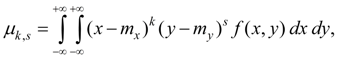

The central moment of order k + s of a system of two random variables X and Y is the mathematical expectation of the product of $(X - m_x)^k$ and $(Y - m_y)^s$:

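where $m_x = M(X)$, $m_y = M(Y)$. For a system of discrete random variables X and Y:

$$M[X^k Y^s] = \sum_i \sum_j x_i^k\, y_j^s\, p_{ij}, \qquad M[(X - m_x)^k (Y - m_y)^s] = \sum_i \sum_j (x_i - m_x)^k (y_j - m_y)^s\, p_{ij},$$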

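where $p_{ij} = P\{X = x_i,\ Y = y_j\}$. For a system of continuous random variables X and Y:

$$M[X^k Y^s] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x^k y^s f(x, y)\, dx\, dy, \qquad M[(X - m_x)^k (Y - m_y)^s] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} (x - m_x)^k (y - m_y)^s f(x, y)\, dx\, dy,$$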

where f(x, y) is the joint density function of the system of random variables X and Y.

In engineering applications of mathematical statistics, moments of the first and second orders are used most often. Initial moments of the first order

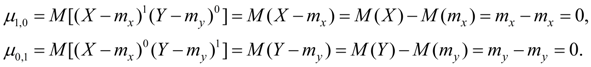

are the mathematical expectations of the random variables X and Y. Central moments of the first order are always equal to zero:

$$M[X - m_x] = M[Y - m_y] = 0.$$

Initial moments of the second order

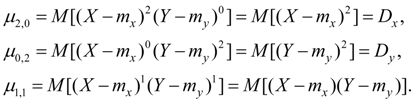

Central moments of the second order:

$$M[(X - m_x)^2] = D_x, \qquad M[(Y - m_y)^2] = D_y.$$

Here $D_x$, $D_y$ are the dispersions (variances) of the random variables X and Y.
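The first-order and second-order moments described above can be computed directly from a distribution matrix. The sketch below uses a hypothetical discrete system; the function names `initial_moment` and `central_moment` are assumptions introduced for this example.

```python
from fractions import Fraction

# Hypothetical discrete system (values and probabilities are illustrative).
x_values = [0, 1]
y_values = [10, 20, 30]
p = [
    [Fraction(1, 10), Fraction(2, 10), Fraction(1, 10)],
    [Fraction(2, 10), Fraction(3, 10), Fraction(1, 10)],
]

def initial_moment(k, s):
    """Initial moment of order k + s: the expectation of X^k * Y^s."""
    return sum(
        xi ** k * yj ** s * p[i][j]
        for i, xi in enumerate(x_values)
        for j, yj in enumerate(y_values)
    )

# Initial moments of the first order are the expectations of X and Y.
m_x = initial_moment(1, 0)
m_y = initial_moment(0, 1)

def central_moment(k, s):
    """Central moment of order k + s: expectation of (X-m_x)^k * (Y-m_y)^s."""
    return sum(
        (xi - m_x) ** k * (yj - m_y) ** s * p[i][j]
        for i, xi in enumerate(x_values)
        for j, yj in enumerate(y_values)
    )

d_x = central_moment(2, 0)  # dispersion of X
d_y = central_moment(0, 2)  # dispersion of Y
```

For this matrix the first-order central moments `central_moment(1, 0)` and `central_moment(0, 1)` both evaluate to zero, as the text states they always must. Exact fractions are used so that this zero is not blurred by rounding.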

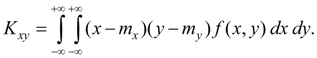

The mixed central moment of the second order, $M[(X - m_x)(Y - m_y)]$, is called the covariance of the random variables X and Y.

From the definition of covariance (48) it follows that:

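The dispersion of a random variable is in essence a special case of covariance, namely the covariance of the variable with itself:

$$M[(X - m_x)(X - m_x)] = M[(X - m_x)^2] = D_x.$$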

From the definition of covariance (48) we obtain:

The covariance of two random variables X and Y characterizes both the degree of their dependence and their dispersion around the point $(m_x, m_y)$.

Expression (52) follows from the definition of covariance (48). The dimension of the covariance is the product of the dimensions of the random variables X and Y. The dimensionless quantity that describes only the dependence of X and Y, and not their spread,

$$\frac{M[(X - m_x)(Y - m_y)]}{\sqrt{D_x\, D_y}},$$

is called the coefficient of correlation of the random variables X and Y.
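A short Python sketch can show how the covariance and the coefficient of correlation are obtained from a distribution matrix. The matrix is hypothetical, the helper `expectation` is an assumption made for this example, and the shortcut M[XY] - m_x m_y used as a cross-check is the standard identity rather than something quoted from the text.

```python
import math
from fractions import Fraction

# Hypothetical discrete system (values and probabilities are illustrative).
x_values = [0, 1]
y_values = [10, 20, 30]
p = [
    [Fraction(1, 10), Fraction(2, 10), Fraction(1, 10)],
    [Fraction(2, 10), Fraction(3, 10), Fraction(1, 10)],
]

def expectation(g):
    """Mathematical expectation of g(X, Y) over the distribution matrix."""
    return sum(
        g(xi, yj) * p[i][j]
        for i, xi in enumerate(x_values)
        for j, yj in enumerate(y_values)
    )

m_x = expectation(lambda x, y: x)
m_y = expectation(lambda x, y: y)

# Covariance as the mixed central moment of the second order.
cov_xy = expectation(lambda x, y: (x - m_x) * (y - m_y))
cov_yx = expectation(lambda x, y: (y - m_y) * (x - m_x))

# Dispersions are the covariances of each variable with itself.
d_x = expectation(lambda x, y: (x - m_x) * (x - m_x))
d_y = expectation(lambda x, y: (y - m_y) * (y - m_y))

# Cross-check with the standard shortcut M[XY] - m_x * m_y.
cov_shortcut = expectation(lambda x, y: x * y) - m_x * m_y

# Coefficient of correlation: covariance scaled by both standard deviations.
r_xy = float(cov_xy) / math.sqrt(float(d_x * d_y))
```

The covariance carries the units of X times the units of Y, while `r_xy` is a pure number, which makes it the more convenient measure when variables on different scales are compared.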

This parameter characterizes the degree of linear dependence of the random variables X and Y. For any two random variables X and Y, the absolute value of the coefficient of correlation does not exceed 1.


Both random variables X and Y are continuous. Then the function
